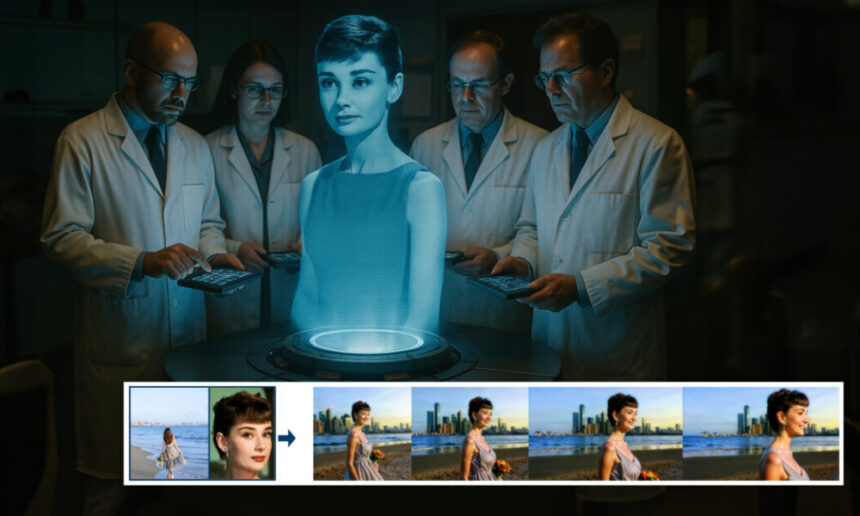

Video foundation models such as Hunyuan and Wan 2.1, while powerful, do not offer users the kind of granular control that film and TV production (particularly VFX production) demands.

In professional visual effects studios, open-source models like these are typically used alongside earlier image-based (rather than video) models such as Stable Diffusion, Kandinsky, and Flux, together with a range of supporting tools that adapt their raw output to meet specific creative needs. When a director says, “That looks great, but can we make it a little more [n]?”, you can’t respond by saying that the model isn’t precise enough to handle such requests.

Instead, an AI VFX team will use a range of traditional CGI and compositing techniques, allied with custom procedures and workflows developed over time, in order to push the limits of video synthesis a little further.

So, by analogy, a foundation video model is much like a default installation of a web browser such as Chrome: it does a lot out of the box, but if you want it to adapt to your needs, rather than the other way around, you’re going to need some plugins.

Control Freaks

In the world of diffusion-based image synthesis, the most important third-party system of this kind is ControlNet.

ControlNet is a technique for adding structured control to diffusion-based generative models, allowing users to guide image or video generation with additional inputs such as edge maps, depth maps, or pose information.

ControlNet’s various methods allow for depth > image (top row), semantic segmentation > image (bottom left), and pose-guided image generation of humans and animals (bottom left).

Instead of relying solely on text prompts, ControlNet introduces separate neural network branches, or adapters, which process these conditioning signals while preserving the base model’s generative capabilities.

This enables fine-tuned outputs that adhere more closely to user specifications, making ControlNet particularly useful in applications that require precise composition, structure, or motion control.
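
As a rough illustration of the adapter idea described above, the following Python sketch attaches a separate conditioning branch to a frozen base layer. Everything here is a hypothetical toy (the function names, the scalar weights `w_in` and `w_out`, and the arithmetic inside `frozen_base_block` are all assumptions made for illustration); it is not ControlNet's actual code. The one detail taken from the ControlNet paper is that the branch's output projection starts at zero, so an untrained adapter leaves the base model's output untouched.

```python
def frozen_base_block(x):
    """Stand-in for one frozen layer of the base model (hypothetical toy math)."""
    return [2.0 * v + 1.0 for v in x]


def adapter_branch(x, cond, w_in, w_out):
    """Side branch that sees the conditioning signal (e.g. a depth or edge map).

    ControlNet uses a trainable copy of the base block; here the same toy
    function stands in for that copy. w_in and w_out are hypothetical
    scalar stand-ins for the learned projections.
    """
    hidden = [v + w_in * c for v, c in zip(x, cond)]
    branch = frozen_base_block(hidden)
    return [w_out * b for b in branch]


def controlled_block(x, cond, w_in=0.0, w_out=0.0):
    """Base output plus the adapter residual.

    With the default zero-initialised w_out the residual is zero, so the
    result equals the frozen base model's output.
    """
    base = frozen_base_block(x)
    residual = adapter_branch(x, cond, w_in, w_out)
    return [b + r for b, r in zip(base, residual)]


features = [1.0, 2.0]
condition = [0.5, -0.5]
print(controlled_block(features, condition))                      # untrained adapter
print(controlled_block(features, condition, w_in=1.0, w_out=0.5))  # adapter active
```

The point of the sketch is structural: the control signal never touches the base weights, which is what makes adapters easy to bolt on, and also what makes them external to the model they steer.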

With a guiding pose, a variety of accurate output types can be obtained via ControlNet. Source: https://arxiv.org/pdf/2302.05543

However, adapter-based frameworks of this kind operate externally on a set of neural processes that are very internally focused. These approaches have several drawbacks.

First, adapters are trained independently, which leads to branch conflicts when multiple adapters are combined, and this can degrade generation quality.

Second, they introduce parameter redundancy: each adapter requires additional computation and memory, making scaling inefficient.

Third, despite their flexibility, adapters often produce sub-optimal results compared to models that are fully fine-tuned for multi-condition generation. These issues make adapter-based methods less effective for tasks that require seamless integration of multiple control signals.

Ideally, the capacities of ControlNet would be trained natively into the model, in a modular way that could accommodate later and much-anticipated innovations such as simultaneous video/audio generation, or native lip-sync capabilities (for external audio).

As it stands, every additional piece of functionality represents either a post-production task or a non-native procedure that has to navigate the tightly bound and sensitive weights of whichever foundation model it is operating on.

FullDiT

Into this standoff comes a new offering from China, which posits a system in which ControlNet-style measures are baked directly into a generative video model at training time, rather than being relegated to an afterthought.

From the new paper: the FullDiT approach can incorporate identity imposition, depth and camera movement into a native generation, and can summon up any combination of these at once. Source: https://arxiv.org/pdf/2503.19907

Titled FullDiT, the new approach fuses multi-task conditions such as identity transfer, depth mapping and camera movement into an integrated part of a trained generative video model, for which the authors have produced a prototype trained model, with accompanying video clips at a project site.

In the example below, we see generations that incorporate camera movement, identity information and text information (i.e., guiding user text prompts).

Examples of ControlNet-style user imposition using only a natively trained foundation model. Source: https://fulldit.github.io/

Note that the authors do not present their experimentally trained model as a functional foundation model, but rather as a proof of concept for native text-to-video (T2V) and image-to-video (I2V) models.

Since there are no similar models of this kind yet, the researchers created a new benchmark titled FullBench for the evaluation of multi-task videos, and claim state-of-the-art performance in the like-for-like tests they devised against prior approaches. However, since FullBench was designed by the authors themselves, its objectivity is untested, and its dataset of 1,400 cases may be too limited to support broader conclusions.

Perhaps the most interesting aspect of the architecture the paper puts forward is its potential to incorporate new types of control. The authors state:

“In this work, we explore only the control conditions for cameras, identity and depth information. We did not further explore other conditions and modalities such as audio, speech, point clouds, object bounding boxes, optical flow, etc. Although FullDiT’s design can seamlessly integrate other modalities with minimal architectural changes, how to quickly and cost-effectively adapt existing models to new conditions and modalities remains an important question.”

Though the researchers present FullDiT as a step forward in multi-task video generation, it should be considered that this new work builds on existing architectures rather than introducing a fundamentally new paradigm.

Nonetheless, FullDiT currently stands alone (as far as I know) as a video foundation model with “hard-coded” ControlNet-style facilities, and it is good to see that the proposed architecture can accommodate later innovations too.

Examples of user-controlled camera movement, from the project site.

The new paper is titled FullDiT: Multi-Task Video Generative Foundation Model with Full Attention, and comes from nine researchers across Kuaishou Technology and The Chinese University of Hong Kong. The project page is here, and the new benchmark data is at Hugging Face.

Method

The authors contend that FullDiT’s unified attention mechanism enables stronger cross-modal representation learning by capturing both spatial and temporal relationships across conditions.

According to the new paper, FullDiT integrates multiple input conditions through full self-attention, converting them into a unified sequence. By contrast, adapter-based models (leftmost above) use separate modules for each input, leading to redundancy, conflicts, and weaker performance.

Unlike adapter-based setups that process each input stream separately, this shared attention structure avoids branch conflicts and reduces parameter overhead. The authors also claim that the architecture can scale to new input types without major redesign, and that the model shows signs of generalizing to combinations of conditions not seen during training, such as linking camera motion with character identity.

Examples of identity generation, from the project site.

In FullDiT’s architecture, all conditioning inputs, such as text, camera motion, identity, and depth, are first converted into a unified token format. These tokens are then concatenated into a single long sequence, which is processed through a stack of transformer layers using full self-attention. This approach follows prior works such as Open-Sora Plan and Movie Gen.

This design allows the model to learn temporal and spatial relationships jointly across all conditions. Each transformer block operates over the entire sequence, enabling dynamic interactions between modalities without relying on separate modules for each input. As noted above, the architecture is also designed to be extensible, making it much easier to incorporate additional control signals in the future without major structural changes.
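
The unified-sequence idea can be sketched in a few lines of Python. This is a minimal toy, assuming a single attention head with no learned query/key/value projections and tiny hand-written two-dimensional tokens; the helper names `build_sequence` and `full_self_attention` are hypothetical, and none of this is taken from the authors' implementation. It only shows that, once every condition lives in one sequence, every token can attend to every other token regardless of which modality it came from.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def build_sequence(conditions):
    """Concatenate per-modality token lists into one long sequence.

    Returns the sequence plus a parallel list recording which modality
    each token came from.
    """
    sequence, owners = [], []
    for name, tokens in conditions.items():
        sequence.extend(tokens)
        owners.extend([name] * len(tokens))
    return sequence, owners


def full_self_attention(tokens):
    """Single-head attention where queries, keys and values are the tokens
    themselves (an assumption made to keep the sketch short)."""
    dim = len(tokens[0])
    outputs, weights = [], []
    for query in tokens:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        row = softmax(scores)
        weights.append(row)
        outputs.append(
            [sum(row[j] * tokens[j][i] for j in range(len(tokens))) for i in range(dim)]
        )
    return outputs, weights


conditions = {
    "text": [[1.0, 0.0]],
    "camera": [[0.0, 1.0], [0.5, 0.5]],
    "identity": [[1.0, 1.0]],
    "depth": [[0.2, 0.8]],
}
sequence, owners = build_sequence(conditions)
outputs, weights = full_self_attention(sequence)
for owner, row in zip(owners, weights):
    print(owner, [round(w, 3) for w in row])
```

Each printed row is one token's attention over the whole sequence; no entry is zero, which is the toy equivalent of the cross-modal interaction that per-input adapter branches cannot provide.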

The Power of Three

FullDiT converts each control signal into a standardized token format so that all conditions can be processed together in a unified attention framework. For camera motion, the model encodes a sequence of extrinsic parameters, such as position and orientation, for each frame. These parameters are timestamped and projected into embedding vectors that reflect the temporal nature of the signal.
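
A minimal sketch of what turning per-frame camera extrinsics into tokens might look like follows. The flattening of `[R|t]` into twelve numbers, the normalized timestamp appended to each frame, and the `weights` projection matrix are all assumptions made for illustration; the article does not describe the paper's exact encoding.

```python
def flatten_extrinsics(rotation, translation):
    """Flatten a 3x3 rotation and a 3-vector translation into 12 numbers,
    row by row, as in a [R|t] matrix."""
    flat = []
    for row, offset in zip(rotation, translation):
        flat.extend(row)
        flat.append(offset)
    return flat


def project(vector, weights):
    """Plain matrix-vector product; weights has one row per output dimension."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def camera_tokens(frames, weights):
    """One token per frame: flattened extrinsics plus a normalized timestamp,
    projected to the embedding size (hypothetical scheme)."""
    last = max(len(frames) - 1, 1)
    tokens = []
    for index, (rotation, translation) in enumerate(frames):
        stamped = flatten_extrinsics(rotation, translation) + [index / last]
        tokens.append(project(stamped, weights))
    return tokens


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# A camera dollying forward along z over three frames.
frames = [(identity, [0.0, 0.0, float(z)]) for z in range(3)]
# Hypothetical 2 x 13 projection: first row sums everything, second keeps the timestamp.
weights = [[1] * 13, [0] * 12 + [1]]
print(camera_tokens(frames, weights))
```

In a real model the projection would be learned and far wider; the sketch only shows that the camera path becomes an ordered series of tokens, one per frame.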

Identity information is treated differently, since it is inherently spatial rather than temporal. The model uses identity maps that indicate which characters are present in which parts of each frame. These maps are divided into patches, with each patch projected into an embedding that captures spatial identity cues, allowing the model to associate specific regions of the frame with specific entities.

Depth is a spatio-temporal signal, and the model handles it by dividing depth videos into 3D patches that span both space and time. These patches are then embedded in a way that preserves their structure across frames.
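
The patching step described above can be sketched as follows. This is an illustrative toy on nested Python lists, with a hypothetical `patchify_3d` helper and arbitrary patch sizes; the paper's real patch dimensions and embedding layers are not given in this article. A 2D patch of a per-frame identity map is simply the special case where the patch spans one frame.

```python
def patchify_3d(video, patch):
    """Split a [frames][height][width] volume into flattened 3D patches.

    patch is (frames, height, width) per patch; every dimension of the
    video must be an exact multiple of the matching patch dimension.
    """
    pt, ph, pw = patch
    frames, height, width = len(video), len(video[0]), len(video[0][0])
    if frames % pt or height % ph or width % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    patches = []
    for t0 in range(0, frames, pt):
        for y0 in range(0, height, ph):
            for x0 in range(0, width, pw):
                patches.append([
                    video[t][y][x]
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                ])
    return patches


# Toy 4-frame, 4x4 depth video whose values encode their own coordinates.
depth_video = [[[t * 100 + y * 10 + x for x in range(4)] for y in range(4)] for t in range(4)]

spacetime = patchify_3d(depth_video, (2, 2, 2))   # depth-style 3D patches
spatial = patchify_3d(depth_video, (1, 2, 2))     # identity-map-style 2D patches
print(len(spacetime), spacetime[0])
print(len(spatial), spatial[0])
```

Each flattened patch would then be passed through a learned projection to become one token; that projection is omitted here.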

Once embedded, all of these condition tokens (camera, identity, and depth) are concatenated into a single long sequence, allowing FullDiT to process them together using full self-attention. This shared representation makes it possible for the model to learn interactions across modalities and across time, without relying on isolated processing streams.

Data and Testing

FullDiT’s training approach relied on selectively annotated datasets tailored to each conditioning type, rather than requiring all conditions to be present simultaneously.

For textual conditions, the initiative follows the structured captioning approach outlined in the MiraData project.

Video collection and annotation pipeline from the MiraData project. Source: https://arxiv.org/pdf/2407.06358

For camera motion, the RealEstate10K dataset was the main data source, due to its high-quality ground-truth annotations of camera parameters.

However, the authors observed that training exclusively on static-scene camera datasets such as RealEstate10K tended to reduce dynamic object and human movements in the generated videos. To counteract this, they conducted additional fine-tuning using internal datasets that included more dynamic camera motions.

Identity annotations were generated using the pipeline developed for the ConceptMaster project, which allowed efficient filtering and extraction of fine-grained identity information.

The ConceptMaster framework is designed to address identity decoupling issues while preserving concept fidelity in customized videos. Source: https://arxiv.org/pdf/2501.04698

Depth annotations were obtained from the Panda-70M dataset using Depth Anything.

Optimization Through Data Ordering

The authors also implemented a progressive training schedule, introducing the more challenging conditions earlier in training so that the model acquired robust representations before simpler tasks were added. Training proceeded from text to camera conditions, then identities, and finally depth, with easier tasks generally introduced later and with fewer examples.

The authors emphasize the value of ordering the workload in this way:

“We noted that during the pre-training stage, more difficult tasks require extended training time and need to be introduced earlier in the learning process. These challenging tasks include complex data distributions that are very different from the output video, and the models must be capable of accurately capturing and representing them.

Conversely, if you introduce a simpler task too early, it may lead the model to prioritize learning it first, since it provides more immediate optimization feedback, and this hinders the convergence of the more challenging tasks.”

Diagram of the data training order adopted by the researchers. Red indicates greater data volume.
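
The ordering described above can be expressed as a simple staged schedule. In the sketch below, only the order (text, camera, identity, depth) and the roughly 32,000 total steps come from the article; the stage boundaries in `HYPOTHETICAL_START_STEPS` are invented placeholders, since the actual switch points are not stated here.

```python
CONDITION_ORDER = ["text", "camera", "identity", "depth"]

# Placeholder boundaries, NOT figures from the paper.
HYPOTHETICAL_START_STEPS = {"text": 0, "camera": 8000, "identity": 16000, "depth": 24000}


def active_conditions(step, start_steps=HYPOTHETICAL_START_STEPS):
    """Conditions that have been introduced by a given training step."""
    return [name for name in CONDITION_ORDER if step >= start_steps[name]]


def exposure(total_steps, start_steps=HYPOTHETICAL_START_STEPS):
    """Number of steps each condition is present for over the whole run."""
    return {name: max(total_steps - start_steps[name], 0) for name in CONDITION_ORDER}


for step in (0, 8000, 16000, 24000):
    print(step, active_conditions(step))
print(exposure(32000))
```

Under any such schedule, the conditions introduced first are seen for the most steps, which matches the authors' argument that harder tasks need the longest training time.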

After the initial pre-training, a final fine-tuning stage further refined the model to improve visual quality and motion dynamics. Thereafter, training followed that of a standard diffusion framework*: noise is added to the video latents, and the model learns to predict and remove it, using the embedded condition tokens as guidance.
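
For readers unfamiliar with that training loop, here is a minimal sketch of one step, assuming a DDPM-style noise-prediction objective. The article only says "standard diffusion framework", so the exact objective, the `alpha_bar` noise level and the `denoiser` callable are assumptions, and the latent here is just a short list of numbers.

```python
import math
import random


def add_noise(latent, noise, alpha_bar):
    """Forward diffusion: blend the clean latent with Gaussian noise.

    alpha_bar is the cumulative signal level in [0, 1]; 1.0 means no noise.
    """
    signal, sigma = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [signal * x + sigma * n for x, n in zip(latent, noise)]


def mse(prediction, target):
    """Mean squared error between two equal-length lists."""
    return sum((p - t) ** 2 for p, t in zip(prediction, target)) / len(target)


def training_loss(latent, condition_tokens, alpha_bar, denoiser, rng):
    """One training step's loss: noise the latent, ask the (hypothetical)
    denoiser to predict that noise given the condition tokens, and score it."""
    noise = [rng.gauss(0.0, 1.0) for _ in latent]
    noisy = add_noise(latent, noise, alpha_bar)
    predicted = denoiser(noisy, condition_tokens, alpha_bar)
    return mse(predicted, noise)


def untrained_denoiser(noisy, condition_tokens, alpha_bar):
    """Placeholder model that always predicts zero noise."""
    return [0.0] * len(noisy)


latent = [0.5, -1.0, 2.0]
loss = training_loss(latent, ["camera", "identity", "depth"], 0.5, untrained_denoiser, random.Random(0))
print(loss)
```

In the real system the denoiser is the transformer itself, and the condition tokens sit in the same sequence as the noisy video tokens rather than being passed as a separate argument.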

To evaluate FullDiT effectively and provide a fair comparison against existing methods, and in the absence of any other apposite benchmark, the authors introduced FullBench, a curated benchmark suite consisting of 1,400 distinct test cases.

A data explorer instance for the new FullBench benchmark. Source: https://huggingface.co/datasets/kwaivgi/fullbench

Each data point provided ground-truth annotations for various conditioning signals, including camera motion, identity, and depth.

Metrics

The authors evaluated FullDiT using ten metrics covering five main aspects of performance: text alignment, camera control, identity similarity, depth accuracy, and general video quality.

Text alignment was measured using CLIP similarity, while camera control was assessed through rotation error (RotErr), translation error (TransErr), and camera motion consistency (CamMC), following the approach of CamI2V (see also the CameraCtrl project).
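
The article does not spell out the formulas behind RotErr and TransErr, so the sketch below assumes the usual definitions: the geodesic angle between ground-truth and predicted rotation matrices, and the Euclidean distance between translation vectors, each summed over frames. Treat the function names and the summation as assumptions rather than the benchmark's exact code.

```python
import math


def rotation_error(r_gt, r_pred):
    """Angle in radians between two 3x3 rotation matrices.

    trace(R_gt^T R_pred) equals the sum of element-wise products, and the
    relative rotation angle is arccos((trace - 1) / 2).
    """
    trace = sum(r_gt[i][j] * r_pred[i][j] for i in range(3) for j in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # clamp rounding drift
    return math.acos(cos_angle)


def translation_error(t_gt, t_pred):
    """Euclidean distance between two translation vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(t_gt, t_pred)))


def camera_errors(gt_poses, pred_poses):
    """Sum both errors over a sequence of (rotation, translation) poses."""
    rot = sum(rotation_error(g[0], p[0]) for g, p in zip(gt_poses, pred_poses))
    trans = sum(translation_error(g[1], p[1]) for g, p in zip(gt_poses, pred_poses))
    return rot, trans


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
quarter_turn_z = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]

gt = [(identity, [0.0, 0.0, 0.0]), (identity, [0.0, 0.0, 1.0])]
pred = [(identity, [0.0, 0.0, 0.0]), (quarter_turn_z, [3.0, 4.0, 1.0])]
print(camera_errors(gt, pred))
```

Lower is better for both values; a perfect camera trajectory scores zero on each.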

Identity similarity was evaluated using DINO-I and CLIP-I, and depth control accuracy was quantified using Mean Absolute Error (MAE).

Video quality was judged with three metrics from MiraData: frame-level CLIP similarity for smoothness; optical flow-based motion distance for dynamics; and LAION-Aesthetic scores for visual appeal.
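
Two of the simpler metrics above can be sketched directly. The MAE function is the standard definition; the smoothness function assumes that "frame-level CLIP similarity" means the average cosine similarity between the embeddings of adjacent frames, and uses tiny made-up vectors in place of real CLIP embeddings.

```python
import math


def mean_absolute_error(predicted, target):
    """Average absolute difference, e.g. between predicted and reference depth values."""
    return sum(abs(p - t) for p, t in zip(predicted, target)) / len(target)


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def smoothness(frame_embeddings):
    """Mean similarity between each frame's embedding and the next one's.

    The inputs stand in for per-frame CLIP embeddings (an assumption).
    """
    pairs = list(zip(frame_embeddings, frame_embeddings[1:]))
    return sum(cosine_similarity(a, b) for a, b in pairs) / len(pairs)


print(mean_absolute_error([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))
print(smoothness([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]))
```

The second function also makes a later point in the results easier to see: a video with more motion changes more between adjacent frames, so this kind of smoothness score falls as dynamics rise.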

Training

The authors trained FullDiT using an internal (undisclosed) text-to-video diffusion model containing roughly one billion parameters. They intentionally chose a modest parameter size to maintain fairness in comparisons with prior methods and to ensure reproducibility.

Since the training videos differed in length and resolution, the authors standardized each batch by resizing and padding the videos to a common resolution, sampling 77 frames per sequence, and applying attention and loss masks to keep training effective.

The Adam optimizer was used at a learning rate of 1×10⁻⁵ across a cluster of 64 NVIDIA H800 GPUs, for a combined total of 5,120GB of VRAM (consider that in the enthusiast synthesis community, the 24GB of an RTX 3090 is still regarded as a luxurious standard).

The model was trained for around 32,000 steps, incorporating up to three identities per video, along with 20 frames of camera conditions and 21 frames of depth conditions, both evenly sampled from the total of 77 frames.
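
Evenly sampling 20 or 21 condition frames from 77 can be done in several ways, and the article does not say which rule the authors used. The sketch below assumes the simplest one: spread the requested number of indices across the clip so that the first and last frames are always included, rounding to the nearest frame.

```python
def uniform_indices(total_frames, count):
    """Pick `count` frame indices spread evenly over `total_frames`,
    always including the first and last frame (an assumed convention)."""
    if count < 1 or count > total_frames:
        raise ValueError("count must be between 1 and total_frames")
    if count == 1:
        return [0]
    return [round(i * (total_frames - 1) / (count - 1)) for i in range(count)]


camera_frames = uniform_indices(77, 20)
depth_frames = uniform_indices(77, 21)
print(camera_frames)
print(depth_frames)
```

With 77 frames, 20 samples happen to land on every fourth frame exactly, while 21 samples need rounding and so are spaced three or four frames apart.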

For inference, the model generated videos at a resolution of 384×672 pixels (approximately five seconds of video), using 50 diffusion inference steps and a classifier-free guidance scale of five.
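
Classifier-free guidance, mentioned above, combines two predictions at each denoising step: one made with the conditions and one made without. The sketch below shows the standard combination rule with a scale of five; the two input lists are made-up stand-ins for the model's noise predictions.

```python
def classifier_free_guidance(unconditional, conditional, scale):
    """Push the prediction away from the unconditional output and toward
    the conditional one; scale 1.0 returns the conditional prediction."""
    return [u + scale * (c - u) for u, c in zip(unconditional, conditional)]


# Made-up per-element noise predictions for a single denoising step.
without_conditions = [0.0, 1.0]
with_conditions = [1.0, 1.0]

print(classifier_free_guidance(without_conditions, with_conditions, 5.0))
```

A higher scale makes the output follow the prompt and control signals more strictly, usually at some cost to diversity.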

Prior Methods

For camera-to-video evaluation, the authors compared FullDiT against MotionCtrl, CameraCtrl, and CamI2V, with all models trained on the RealEstate10K dataset to ensure consistency and fairness.

For identity-conditioned generation, since no comparable open-source multi-purpose models were available, the model was benchmarked against the 1B-parameter ConceptMaster model, using the same training data and architecture.

For depth-to-video tasks, comparisons were made with Ctrl-Adapter and ControlVideo.

Quantitative results for single-task video generation. FullDiT was compared to MotionCtrl, CameraCtrl, and CamI2V for camera-to-video generation; ConceptMaster (1B-parameter version) for identity-to-video; and Ctrl-Adapter and ControlVideo for depth-to-video. All models were evaluated using their default settings. For consistency, 16 frames were uniformly sampled from each method, matching the output length of prior models.

The results indicate that FullDiT, despite handling multiple conditioning signals simultaneously, achieved state-of-the-art performance in metrics related to text, camera motion, identity, and depth control.

In overall quality metrics, the system generally outperformed the other methods, although its smoothness was slightly lower than ConceptMaster’s. Here the authors comment:

‘The smoothness of FullDiT is slightly lower than that of ConceptMaster, since the smoothness calculation is based on CLIP similarity between adjacent frames. As FullDiT exhibits significantly greater dynamics compared to ConceptMaster, the smoothness metric is affected by the large variations between adjacent frames.

‘For the aesthetic score, since the rating model favors images in a painting style, and ControlVideo typically generates videos in this style, it achieves a high score in aesthetics.’

Regarding the qualitative comparison, it may be preferable to refer to the sample videos at the FullDiT project site, since the PDF examples are inevitably static (and also too large to reproduce in full here).

The first section of the qualitative results in the PDF. Please refer to the source paper for the additional examples, which are too extensive to reproduce here.

The authors comment:

‘FullDiT demonstrates superior identity preservation and generates videos with better dynamics and visual quality compared to [ConceptMaster]. Since ConceptMaster and FullDiT are trained on the same backbone, this highlights the effectiveness of condition injection with full attention.

‘…The [other] results demonstrate the superior controllability and generation quality of FullDiT compared to existing depth-to-video and camera-to-video methods.’

A section of the PDF’s examples of FullDiT’s output with multiple signals. Please refer to the source paper and the project site for additional examples.

Conclusion

FullDiT is an exciting foray into a more full-featured type of video foundation model, but one has to wonder whether demand for ControlNet-style instrumentalities will ever justify implementing such features at scale, at least for FOSS projects.

The main challenge is that using systems such as depth and pose generally requires non-trivial familiarity with relatively complex user interfaces such as ComfyUI. Therefore a functional FOSS model of this kind seems most likely to be developed by a cadre of smaller VFX companies which, given how quickly such systems are made obsolete by model upgrades, have little incentive to curate and train such a model behind closed doors.

On the other hand, API-driven “rent-an-AI” systems may be well motivated to develop simpler and more user-friendly interpretive methods for models into which ancillary control systems have been directly trained.

Depth + text controls imposed on a video generation using FullDiT.

* The authors do not specify any known base model (i.e., SDXL, etc.).

First released on Thursday, March 27th, 2025